Demystifying chatbot containment rates with analytics

Chatbot owners want to know how well their bot is performing and often turn to containment as a north star to evaluate performance.

But is it really all it’s cracked up to be? Let’s take a detailed look at chatbot containment rates to understand where this common metric is useful, as well as where it falls short.

What is Chatbot Containment?

“Containment” is a metric that, in essence, looks at how well a chatbot is automating conversations. In other words, it measures the bot’s ability to help customers get answers to their burning questions without having to escalate to a live agent.

A user interaction with a chatbot is considered “contained” when the user successfully resolves their issue or completes their task without needing to be transferred to a human agent. The conventional wisdom is that this indicates the chatbot was able to provide the necessary information or perform the required actions entirely on its own.

In turn, a chatbot or contact center containment rate is the percentage of total chatbot interactions that are successfully contained.
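As a minimal sketch of the calculation, the snippet below assumes each conversation record carries a boolean `escalated` flag (a hypothetical field name, not any particular platform's export) and, following the conventional definition, counts every conversation that was never transferred to a live agent as contained.

```python
from dataclasses import dataclass


@dataclass
class Conversation:
    # Hypothetical record holding only what the conventional metric needs.
    conversation_id: str
    escalated: bool  # True if the user was transferred to a live agent


def containment_rate(conversations: list[Conversation]) -> float:
    """Return the percentage of conversations that were never escalated."""
    if not conversations:
        return 0.0  # avoid division by zero for an empty reporting period
    contained = sum(1 for c in conversations if not c.escalated)
    return 100.0 * contained / len(conversations)


if __name__ == "__main__":
    sample = [
        Conversation("a", escalated=False),
        Conversation("b", escalated=False),
        Conversation("c", escalated=True),
        Conversation("d", escalated=False),
    ]
    print(f"{containment_rate(sample):.1f}%")  # 75.0%
```

Note that this simple ratio treats "not escalated" as "contained"; it has no way of knowing whether the customer was actually helped.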

For businesses with conversational AI programs, containment rate is also often referred to as “deflection” or “case deflection.” The term stems from the contact center, where it describes diverting incoming calls away from live agents to automated services; here it refers to the chatbot doing the diverting.

The Importance of Chatbot Containment Benchmarks

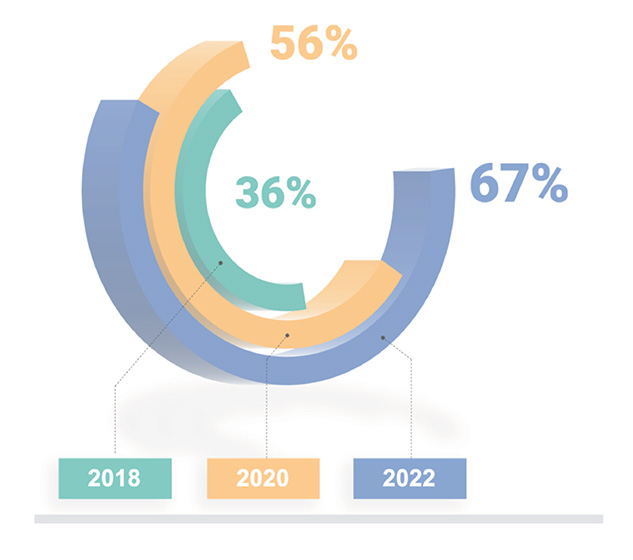

Containment is often considered a positive outcome, and as a chatbot owner, it is likely one of your key performance indicators (KPIs). Salesforce, in its previous State of Service report, documents the growing trend for companies to treat case deflection as a core KPI:

- 2018: 36% of companies reported on it

- 2020: 56% of companies

- 2022: 67% of companies

Indeed, when Gartner predicted that chatbots would become a primary customer service channel by 2027, it identified containment as an area that must improve. It’s no wonder that companies are proud when they can announce containment rates of 70%, 80%, or even 90%.

Unfortunately, while a high call center containment rate sounds like a noble goal, it doesn’t quite tell the full story of how well a chatbot is automating customer conversations.

Look beyond the north star of containment rates with deeper bot analytics

While the business and chatbot creators might consider it a job well done when they achieve a high containment metric, there’s something critical missing from this simple measurement: the customer experience is not taken into account. In fact, a closer look at “contained” interactions might uncover an altogether different story of your customers’ experiences.

If your business is investing in automating the customer experience, you need to understand what is happening behind the scenes and beyond the confines of the containment metric.

Consider, for example, a few scenarios where the conversation is automated, yet the customer has an unfulfilling experience:

- A customer abandons the conversation with the chatbot, without finding a useful resolution to their query.

- A customer gets caught in a loop of misunderstanding, before jumping ship to find help elsewhere.

- A customer “shouts” at the bot for help, but the bot doesn’t understand the plea and so fails to escalate them.

- A customer finds a solution, but it may not be the right or optimal one.

Additionally, customers may complete a given intent, which counts toward a high containment rate, only to pick up the phone and call a live agent because they didn’t get the answer they were looking for. This bypass is yet another way we lose visibility into “true” containment.
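One way to surface this bypass is to cross-reference chat sessions with phone calls from the same customer. The sketch below is illustrative only: it assumes you can join chat and call logs on a shared `customer_id`, and both the record shapes and the 24-hour follow-up window are hypothetical choices, not a standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class ChatSession:
    customer_id: str
    ended_at: datetime
    escalated: bool  # transferred to a live agent inside the chat


@dataclass
class PhoneCall:
    customer_id: str
    started_at: datetime


def adjusted_containment(
    chats: list[ChatSession],
    calls: list[PhoneCall],
    window: timedelta = timedelta(hours=24),  # assumed follow-up window
) -> tuple[float, float]:
    """Return (naive_rate, adjusted_rate) as percentages.

    A chat counts toward the adjusted rate only if it was not escalated
    AND the same customer did not call within `window` after it ended.
    """
    if not chats:
        return 0.0, 0.0
    # Group call start times by customer for quick lookup.
    calls_by_customer: dict[str, list[datetime]] = {}
    for call in calls:
        calls_by_customer.setdefault(call.customer_id, []).append(call.started_at)

    naive = adjusted = 0
    for chat in chats:
        if chat.escalated:
            continue
        naive += 1
        follow_ups = calls_by_customer.get(chat.customer_id, [])
        bypassed = any(chat.ended_at <= t <= chat.ended_at + window for t in follow_ups)
        if not bypassed:
            adjusted += 1
    total = len(chats)
    return 100.0 * naive / total, 100.0 * adjusted / total


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 9, 0)
    chats = [
        ChatSession("c1", t0, escalated=False),
        ChatSession("c2", t0, escalated=False),
        ChatSession("c3", t0, escalated=True),
        ChatSession("c4", t0, escalated=False),
    ]
    calls = [PhoneCall("c2", t0 + timedelta(hours=2))]  # c2 called soon after chatting
    print(adjusted_containment(chats, calls))  # (75.0, 50.0)
```

The window is a trade-off: too short and you miss customers who call the next morning, too long and you attribute unrelated calls to the chat.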

How containment should be considered

When assessing the value of containment, automation should be only one of the factors considered. To understand “true containment,” you need to consider additional factors:

- Does the user leave the chat conversation and escalate to a live agent, or do they abandon the conversation altogether?

- Was there any feedback when they exited the chat?

- Was there negative (or positive) sentiment expressed during the chat?

- Does the chatbot fail to reach a useful conclusion? (For example, a customer asks a question that the bot cannot interpret accurately, so it provides a misleading response.)

- Do false positives, where the bot matches the wrong intent, lead the customer to unrelated responses?
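The checklist above can be sketched as a per-conversation test. This is a minimal illustration, assuming your platform already exports each signal; the field names, the feedback encoding, and the `-0.3` sentiment threshold are all hypothetical and would need tuning against your own data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConversationSignals:
    # All field names are hypothetical; map them to whatever your platform exports.
    reached_goal: bool       # the bot reached an intended end state
    escalated: bool          # handed off to a live agent
    abandoned: bool          # user left before any resolution
    feedback: Optional[int]  # +1 thumbs up, -1 thumbs down, None if not given
    sentiment: float         # e.g. -1.0 (negative) to 1.0 (positive)
    false_positive: bool     # bot matched the wrong intent


def containment_issues(c: ConversationSignals, min_sentiment: float = -0.3) -> list[str]:
    """List the reasons a conversation should NOT count as truly contained."""
    issues = []
    if c.escalated:
        issues.append("escalated")
    if c.abandoned:
        issues.append("abandoned")
    if not c.reached_goal:
        issues.append("no useful conclusion")
    if c.feedback is not None and c.feedback < 0:
        issues.append("negative feedback")
    if c.sentiment < min_sentiment:
        issues.append("negative sentiment")
    if c.false_positive:
        issues.append("false positive")
    return issues


def truly_contained(c: ConversationSignals) -> bool:
    """A conversation is truly contained only if no warning signal fired."""
    return not containment_issues(c)


if __name__ == "__main__":
    # Reached its goal without escalation, so the naive metric calls it contained...
    frustrated = ConversationSignals(
        reached_goal=True, escalated=False, abandoned=False,
        feedback=-1, sentiment=-0.8, false_positive=False,
    )
    # ...but the extra signals tell a different story.
    print(containment_issues(frustrated))  # ['negative feedback', 'negative sentiment']
```

Returning the list of reasons, rather than a bare yes/no, makes it easier to see which failure mode is most common across many conversations.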

Measuring containment in this way provides a more holistic or nuanced view, with better insight into whether the bot is performing effectively or needs to be improved.

Measuring “True Containment” with chatbot analytics

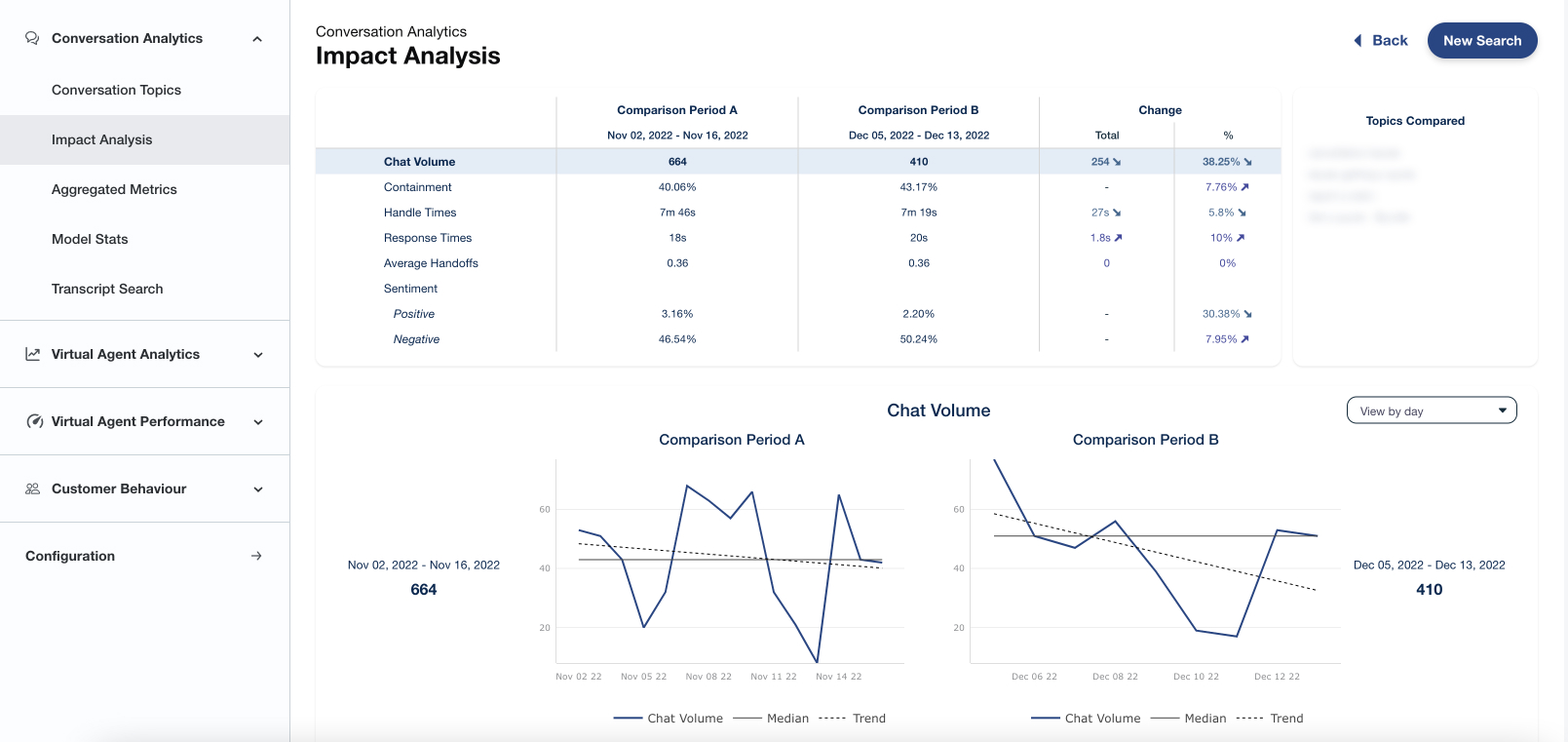

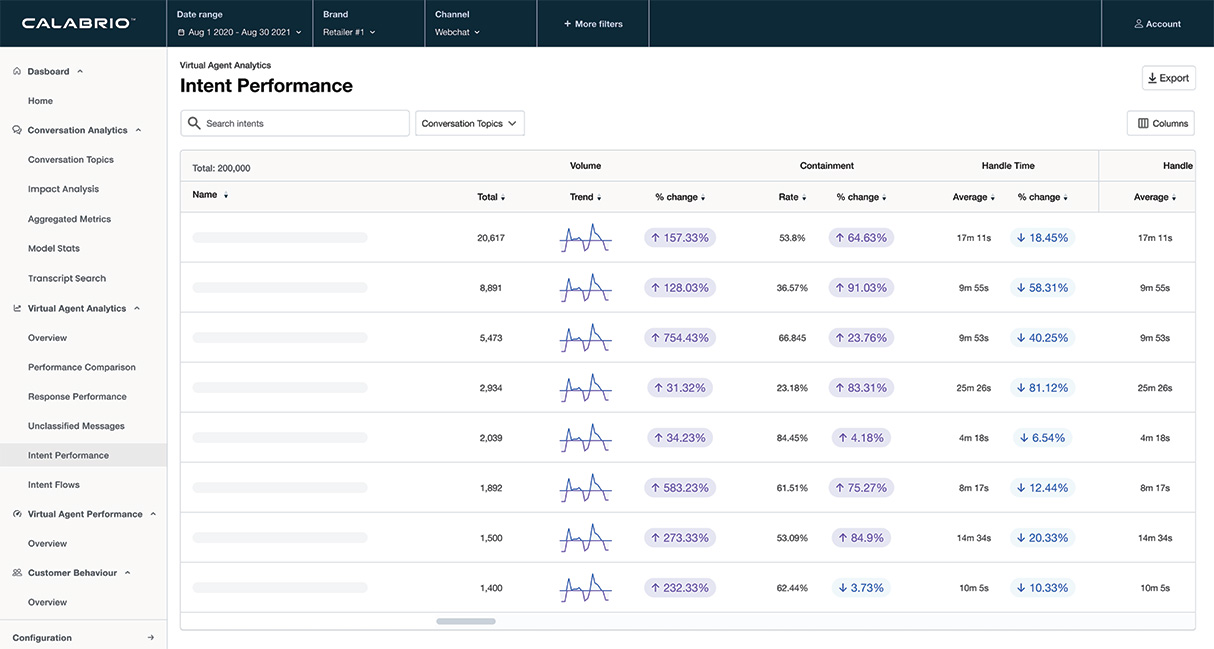

Many chatbot platforms report containment rate as a key performance indicator. However, a more advanced chatbot analytics platform can give you a more accurate measure of how well the bot is automating the conversation.

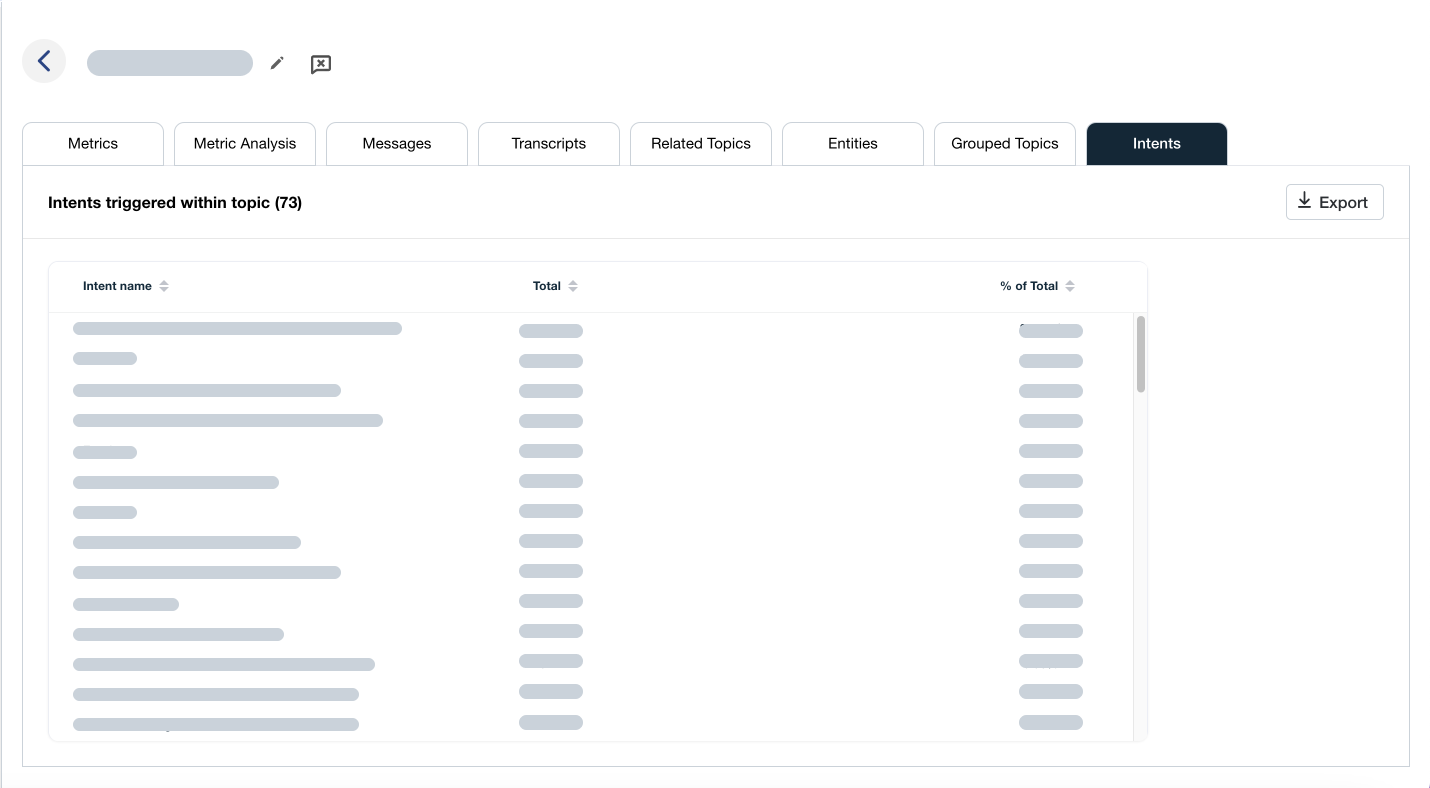

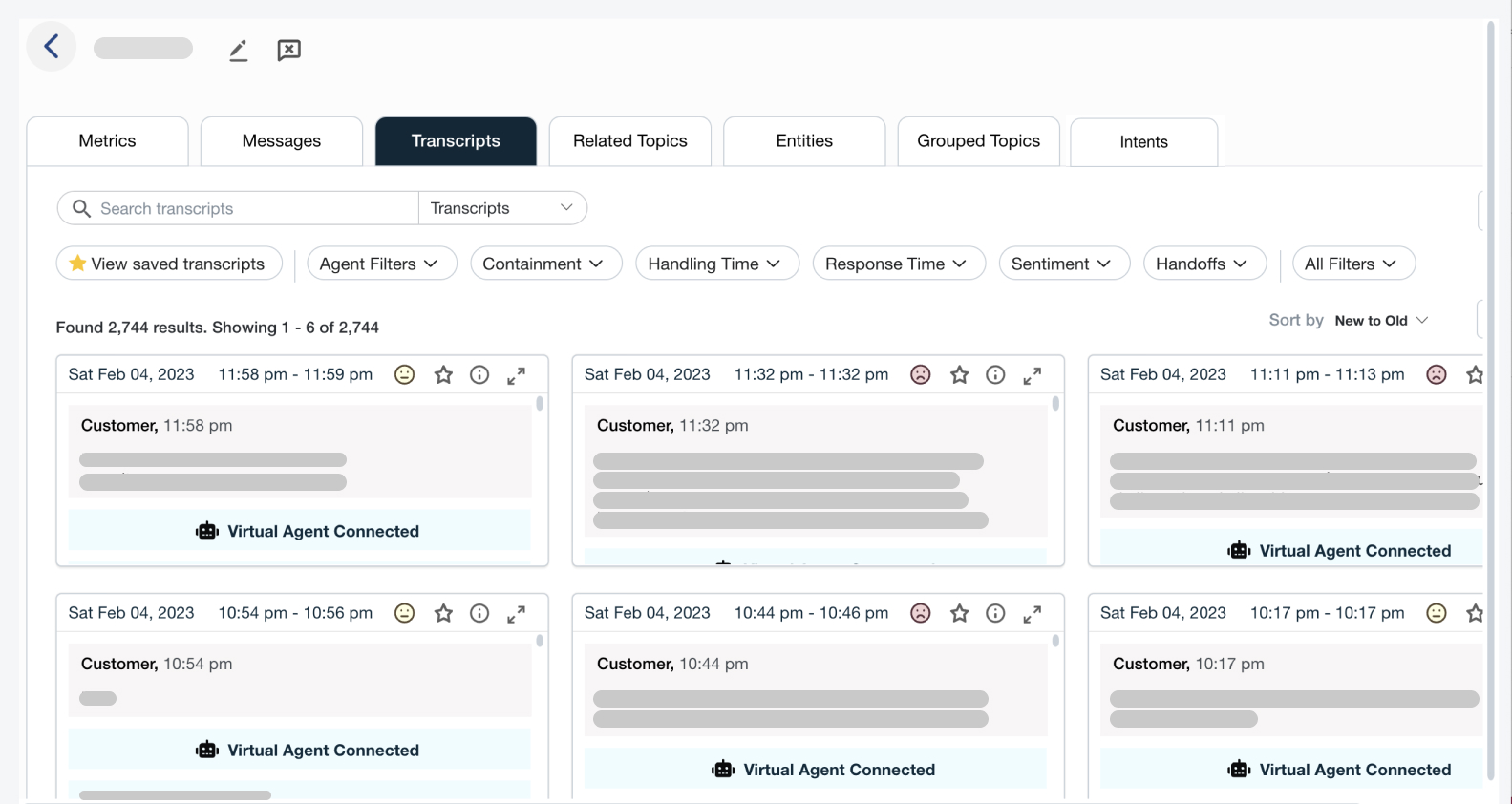

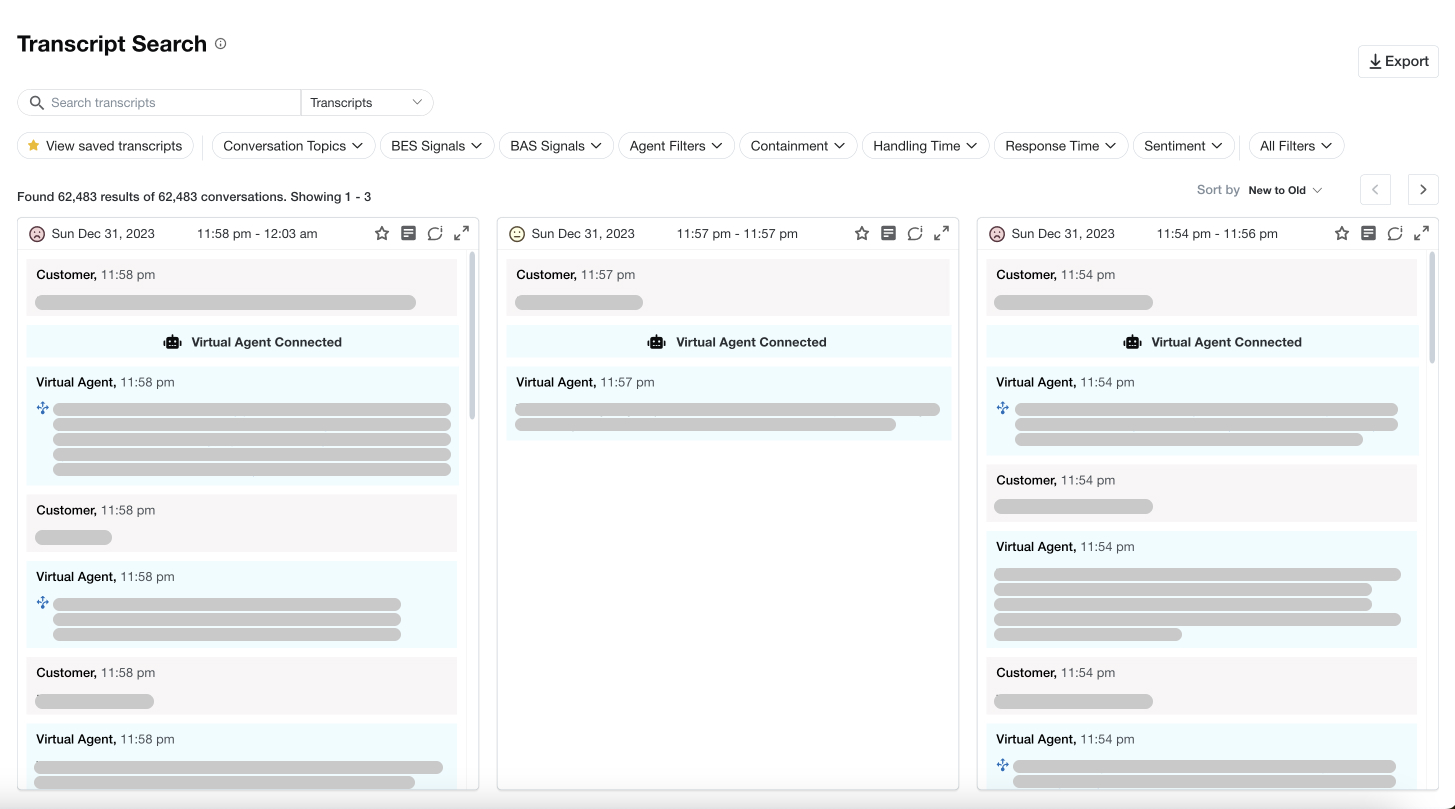

The right chatbot analytics tools will not only capture whether a conversation reached an intended end goal but will also look at other signals found in the data, including escalation, abandonment, explicit feedback, AI-based sentiment analysis, false positive detection, and containment on topics that the bot was not intended to handle. Combining these signals, such a tool scores individual conversations and topics and rolls them up into an overall score, while still letting you examine bot performance at a more granular level.
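To make the roll-up concrete, here is a hypothetical penalty-weighted scheme. It is not Calabrio’s Bot Automation Score, whose formula this article does not describe; the issue labels and weights are invented for illustration. Each conversation starts at 1.0, loses points for every warning signal, and is then averaged per topic and overall.

```python
from collections import defaultdict

# Hypothetical penalty weights: tune these to your own priorities.
PENALTIES = {
    "escalated": 1.0,
    "abandoned": 1.0,
    "no useful conclusion": 0.8,
    "false positive": 0.6,
    "negative feedback": 0.5,
    "negative sentiment": 0.3,
    "out of scope": 0.4,  # contained on a topic the bot was not meant to handle
}


def conversation_score(issues: list[str]) -> float:
    """Score one conversation from 0.0 (failed) to 1.0 (cleanly automated)."""
    penalty = sum(PENALTIES.get(issue, 0.0) for issue in issues)
    return max(0.0, 1.0 - penalty)


def score_by_topic(records: list[tuple[str, list[str]]]) -> tuple[dict[str, float], float]:
    """Average conversation scores per topic and overall.

    `records` holds (topic, issues) pairs, one per conversation.
    """
    per_topic: dict[str, list[float]] = defaultdict(list)
    for topic, issues in records:
        per_topic[topic].append(conversation_score(issues))
    topic_scores = {t: sum(s) / len(s) for t, s in per_topic.items()}
    all_scores = [s for scores in per_topic.values() for s in scores]
    overall = sum(all_scores) / len(all_scores) if all_scores else 0.0
    return topic_scores, overall


if __name__ == "__main__":
    records = [
        ("billing", []),
        ("billing", ["negative sentiment"]),
        ("returns", ["abandoned"]),
        ("returns", []),
    ]
    topics, overall = score_by_topic(records)
    # billing ≈ 0.85, returns = 0.5, overall ≈ 0.675
    print(topics, overall)
```

Averaging every conversation for the overall score weights busy topics more heavily; averaging the topic scores instead would treat each topic equally. Which is right depends on what question you are asking of the bot.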

At Calabrio, we refer to this essential chatbot performance metric as the Bot Automation Score, or BAS. With the BAS, product owners can fill in the gaps to get a more complete picture of how well their bot drives customer experiences without escalation to an agent.

As conversational AI expands with more opportunities to engage your customers, being able to accurately assess containment is key to knowing what is happening under the hood and to ensuring that your customers enjoy smooth experiences and greater satisfaction.

Want to gain a fuller understanding of how your bot handles customer interactions? See the impact of Calabrio Bot Analytics by signing up for a free demo today.