Chatbot intent discovery, done right

Now that your virtual agent is live, the expectation is that the bot is prepared to handle everything. Customers will ask their questions and get the right answer every time; conversations will be fully automated and flawless, and everyone will be happy.

But, let’s be honest, that’s not actually how it goes, right?

Even with your best efforts, imagining every scenario and designing deep, meaningful content for every interaction frankly isn't possible yet. Customers phrase questions in their own unique ways, or they ask about things the bot has never encountered and has no response for: New products? Check. Policy updates? Check. More detail on existing responses? Check.

The answer to this nagging but common issue—or at least the potential answer—is intent discovery. But how do you drive effective discovery for your bots in a way that’s scalable and sustainable?

What is intent discovery? And why is it central to bot improvement and evolution?

The goal of an intelligent virtual agent is to enable customer self-service, guiding customers to the right answer, without needing agent escalation. But how can it do that when customers ask questions it can’t handle?

Conversational AI is a lot of things, but it’s not magic—even if the latest models can do some pretty magical things. That means the important responsibility of making sure your virtual agent is trained and ready to respond falls to the chatbot team.

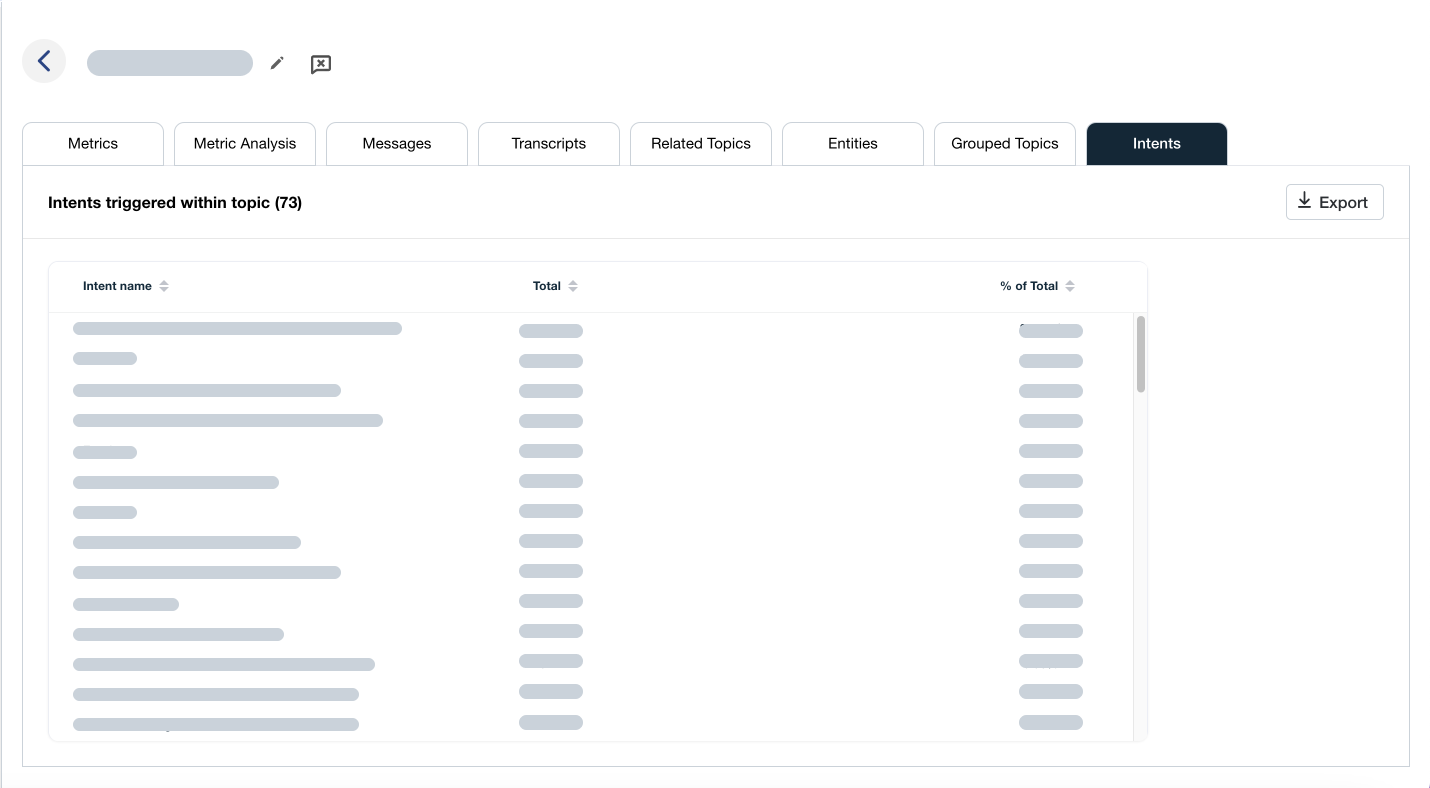

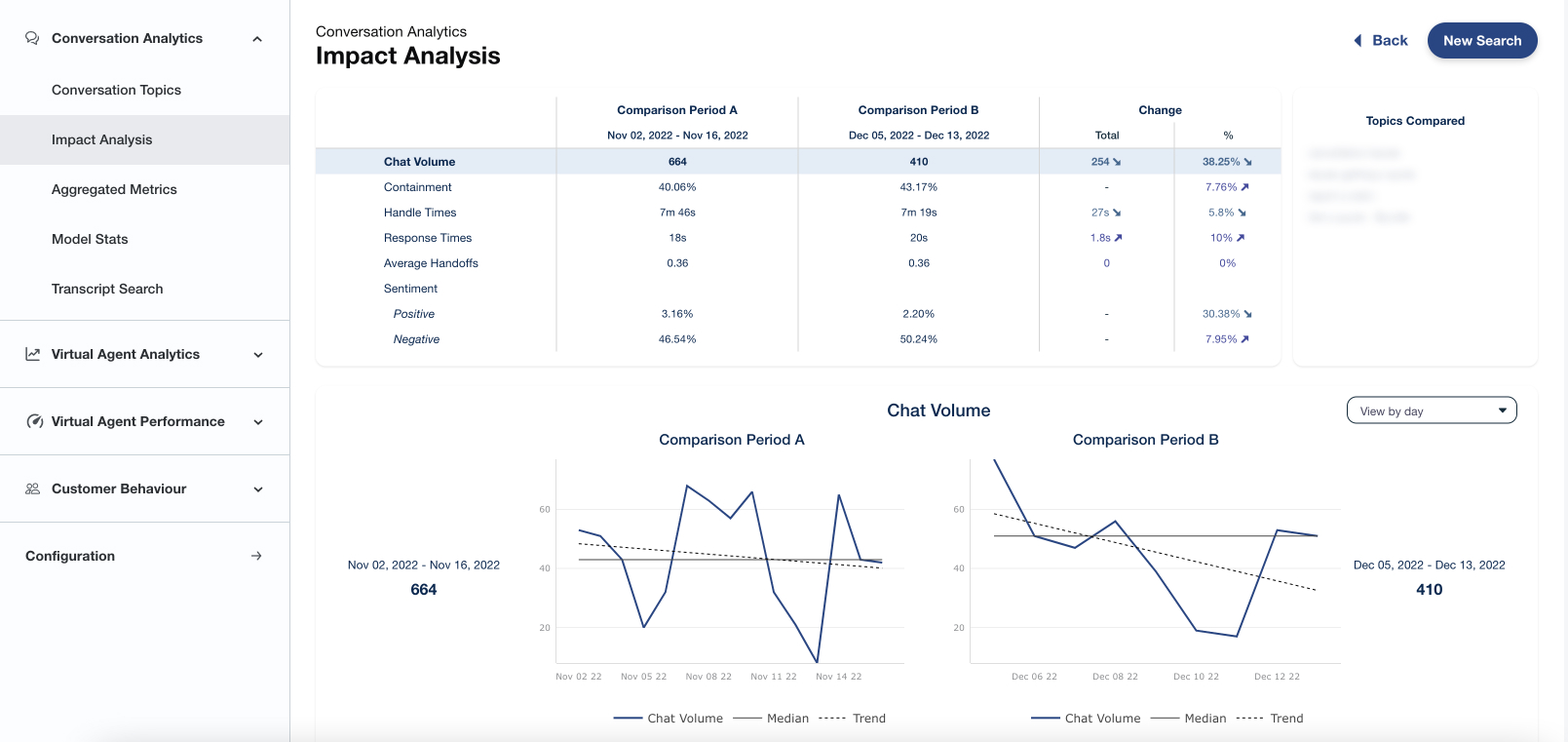

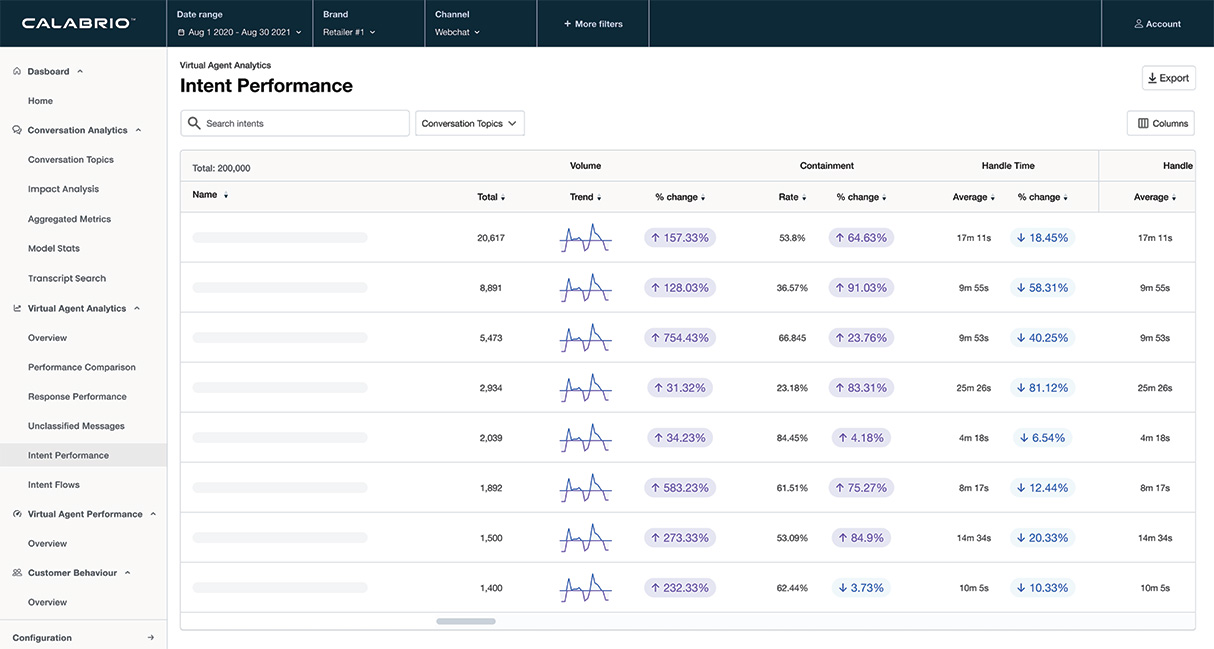

Of course, that work can take many forms. Maybe your customers are asking questions in unexpected ways and the bot needs additional NLU training. It may be that conversations have stalled, and you can improve automation by fine-tuning intents with sub-topics. Or it may simply be that a completely new topic is suddenly seeing an uptick in inquiries, and you need to quickly design and introduce new intents.

Whatever the ultimate corrective measure, the key to improving performance is being able to efficiently find and fix the conversations that cause your virtual agent to stumble. Intent discovery, the process of automatically identifying and categorizing the underlying goals or purposes behind user messages sent to a chatbot, is crucial here. But it's not always easy.

The problem with intent and topic discovery that’s not designed for scale

Effective intent discovery is a huge challenge for chatbot teams—one that’s often hiding in plain sight.

Every day, your virtual agent fields thousands, if not tens of thousands, of inquiries. And for some (hopefully small) portion of those inquiries, the chatbot will simply be unable to provide a helpful answer. The work of chatbot intent and topic discovery, and then designing a work plan to fix the shortcomings, is integral to the day-to-day activities of a chatbot team.

Some teams turn to SQL for help. They follow hunches and write query after query; at times, it can feel like a game of Whac-A-Mole.

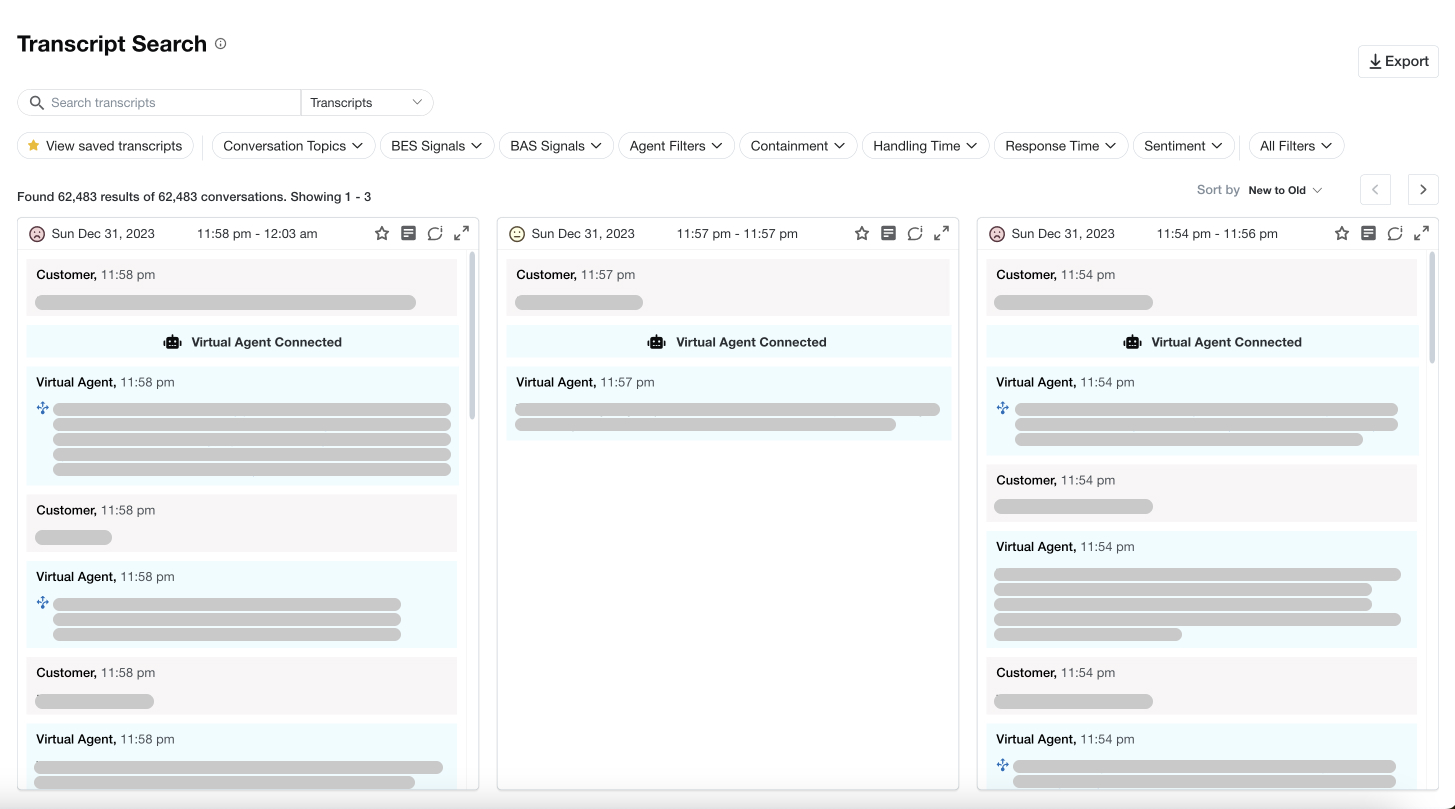

We know of at least one team that made it a daily priority to scroll through 100 conversations to find those awkward moments, manually assessing each one to decide whether it was an opportunity to retrain the bot or build a new intent. Managing vast spreadsheets was a daily practice that consumed a lot of time and energy.

To make matters worse, the longer a virtual agent is in production and the more volume it handles, the harder it is to manually scrub through the data and make sense of it all. And that's if you're lucky enough to have access to timely conversation data. Many teams don't, and they face long waits just to get access.

Other teams rely on specialized colleagues with deep data science expertise. But for these team members, intent discovery may not be a priority, they may not always understand the ask, and when the report comes back, it's not always usable: it often needs to be deciphered, organized, and questioned. Sadly, for at least one team we know, that process took upwards of six months. What a missed opportunity to address customer inquiries and build better automated experiences!

If your virtual agent is managing conversations at scale, you simply can't afford to wait weeks or months while it continues to serve up frustrating conversations and agent escalations.

Evolving a virtual agent so that it becomes more responsive and more thorough, and delivers the automated experience you're looking for, requires a different approach to intent discovery.

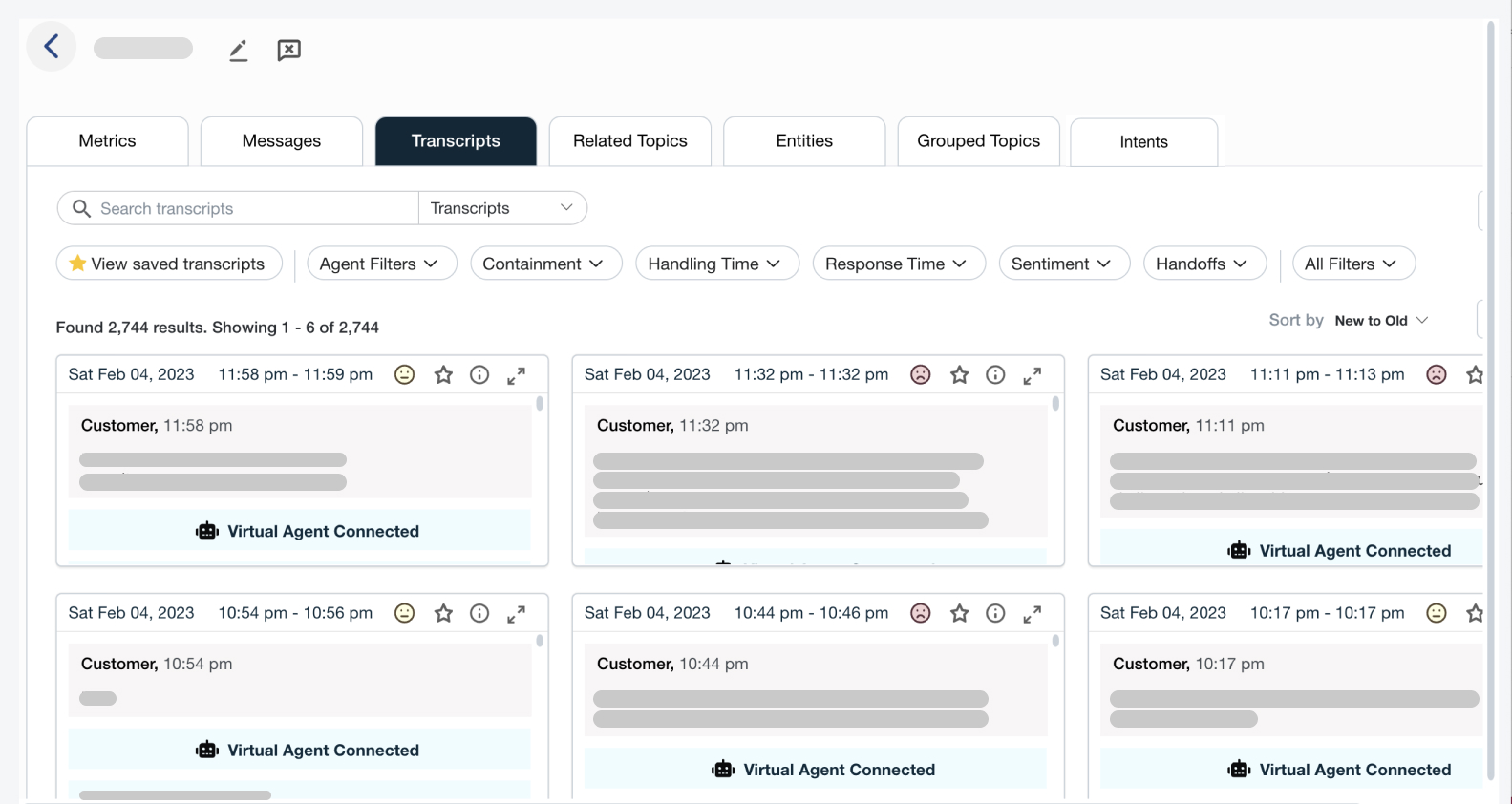

Taking the headache out of a thankless job with AI-driven intent discovery and chatbot analytics

From the newly launched to the most established, virtual agents will always field questions they are unable to answer. Conversational AI is rapidly evolving, and in practice that means virtual agent conversations are becoming increasingly complex. Chatbot teams need the right tools to help them zero in on unsuccessful conversations, navigate this complexity, and efficiently build better conversations.

The good news is that innovative technologies exist that easily pinpoint those difficulties, allow you to identify them as training opportunities or new intents, and then help you prioritize a worklist to address the issues.

See for yourself how Calabrio’s chatbot analytics platform can help you accelerate improvement. The Bot Analytics platform’s unique Bot Automation Score helps teams quickly identify specific topics that require investigation—simplifying the process of creating new intents and delivering better experiences. Learn more about the power of Calabrio Bot Analytics when you book a demo today.