Calabrio Bot Analytics

Improve chatbot and voice bot performance

Calabrio Bot Analytics is chatbot analytics software that empowers chatbot teams, from digital product owners to chatbot operators, conversation designers, and digital analysts. The platform helps you monitor and improve the performance and quality of your customer service chatbots and voice bots.

Leading insights for chatbot leaders

Calabrio Bot Analytics is the top choice for chatbot teams looking to boost bot performance.

Why Calabrio Bot Analytics

Gain a deep understanding of chatbot and voice bot performance

Measure what matters

Monitor technical metrics, translate the outcomes into business KPIs, and better communicate the health and performance of your chatbot or voice bot program.

- Cornerstone KPIs summarize how well your bots are automating every conversation, what your customers think, and how much you’re paying for each conversation.

- The most complete set of chatbot metrics on the market gives you insight and guidance to improve conversation quality and bot responsiveness.

All-access pass to conversation data

Get direct access to every message, including transcripts, from chatbot, voice bot, and live agent conversations. You get everything you need to dig in and fix performance issues, without the need for expensive analyst resources.

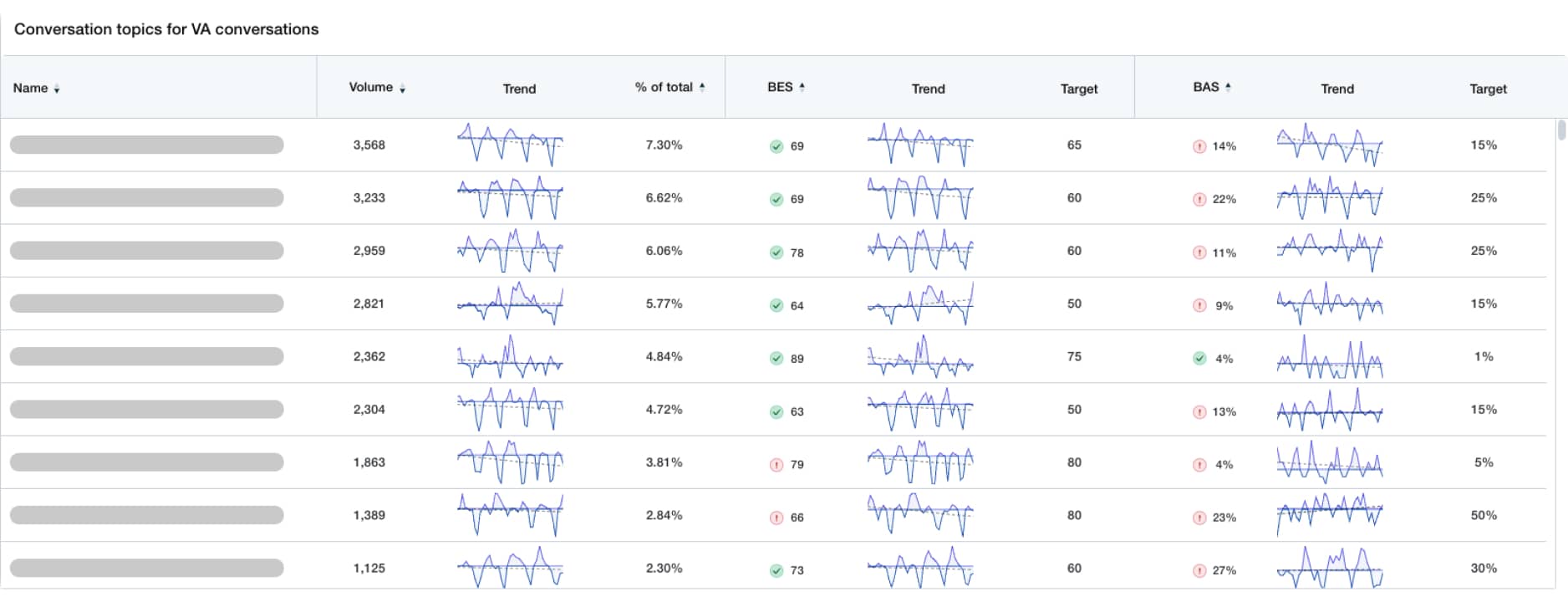

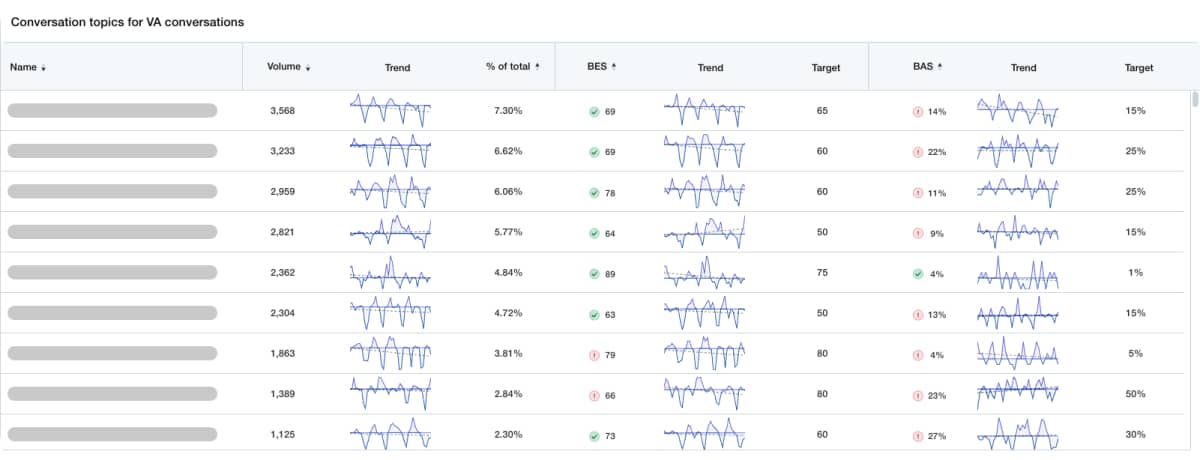

- Industry-forward AI organizes thousands of conversations into topics, making them easy to understand and diagnose.

- Go from the surface to the details in just a few clicks, without having to rely on other teams to extract the data for you.

- Use real customer messages to fix content gaps and upgrade bot responses.

One chatbot analytics platform, all conversations

Funnel conversation data from all chatbots, voice bots, and live agents into a central analytics platform.

- An AI topic model automatically groups all conversations into themes, giving you digestible insights.

- See overall performance or filter by channel, platform, or brand.

- Data is refreshed every 24 hours, so you can see trends as they happen, and act quickly when KPIs fall out of range.
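To make the idea of KPIs falling out of range concrete, here is a minimal sketch of a daily range check. It assumes you define your own target ranges; the KPI names, thresholds, and the `out_of_range` helper are hypothetical and are not part of Calabrio Bot Analytics.

```python
from dataclasses import dataclass


@dataclass
class KpiRange:
    """Acceptable lower and upper bound for one KPI (hypothetical targets)."""
    low: float
    high: float


def out_of_range(latest: dict[str, float], ranges: dict[str, KpiRange]) -> list[str]:
    """Return the names of KPIs whose latest value falls outside its target range."""
    flagged = []
    for name, value in latest.items():
        target = ranges.get(name)
        # KPIs without a configured range are skipped rather than flagged.
        if target is not None and not (target.low <= value <= target.high):
            flagged.append(name)
    return flagged


# Illustrative values only; real targets depend on your bot program.
ranges = {
    "automation_score": KpiRange(0.60, 1.00),
    "cost_per_conversation": KpiRange(0.00, 1.50),
}
latest = {"automation_score": 0.52, "cost_per_conversation": 1.10}
alerts = out_of_range(latest, ranges)
```

With a 24-hour refresh, a check like this could run once per day against the newest values and list the KPIs that need attention.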

Analytics tools to elevate all your conversations

Ditch the guesswork with real data insights.

Make smart decisions with unprecedented visibility into conversation performance and chatbot responsiveness.

Conversation insights, at your fingertips

See every conversation from your automated chatbot or voice bot, all the way to the live agent, to make sure your customers are getting the answers – and the experience – they came for.

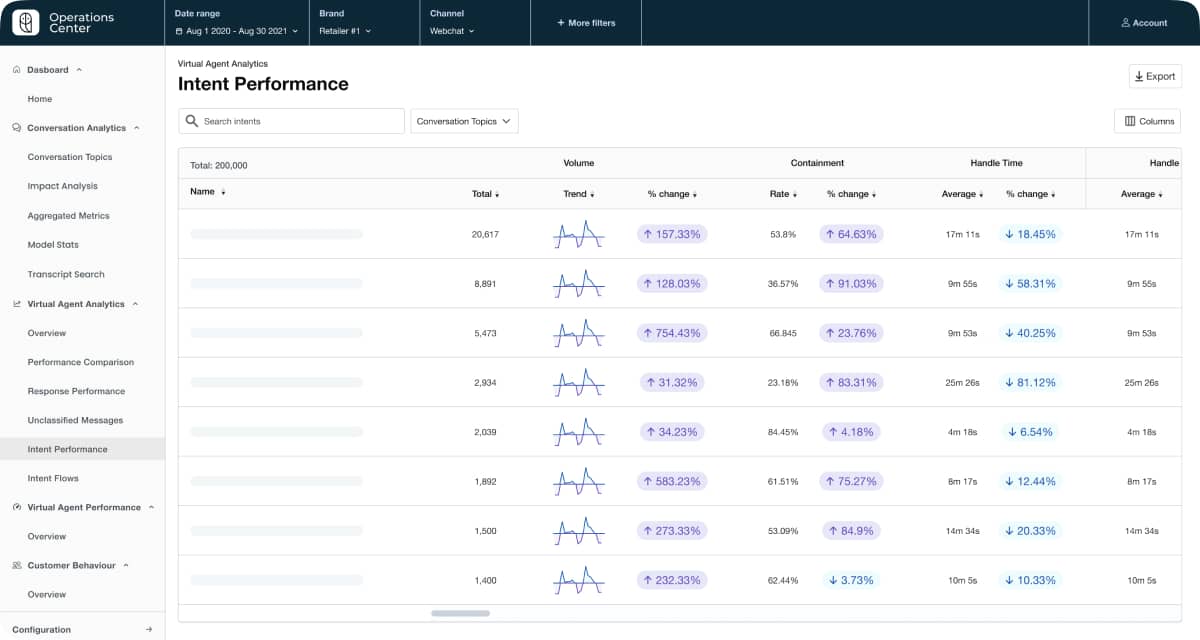

Your window into AI chatbot or voice bot responsiveness

Get fast answers on intent configuration, NLU model performance, and bot responses, to quickly zero in on what’s working – and what needs to be improved.

Connect to your conversational ecosystem

Get the most out of your chatbot data by connecting Calabrio Bot Analytics with your conversational ecosystem.

Calabrio Bot Analytics works with bot development platforms including Google Dialogflow, Kore.ai, Microsoft Power Virtual Agents, Salesforce Einstein, Amazon Lex, IBM Watson, and dozens of others. It also connects with CCaaS platforms such as Genesys, Five9, Avaya, and others.

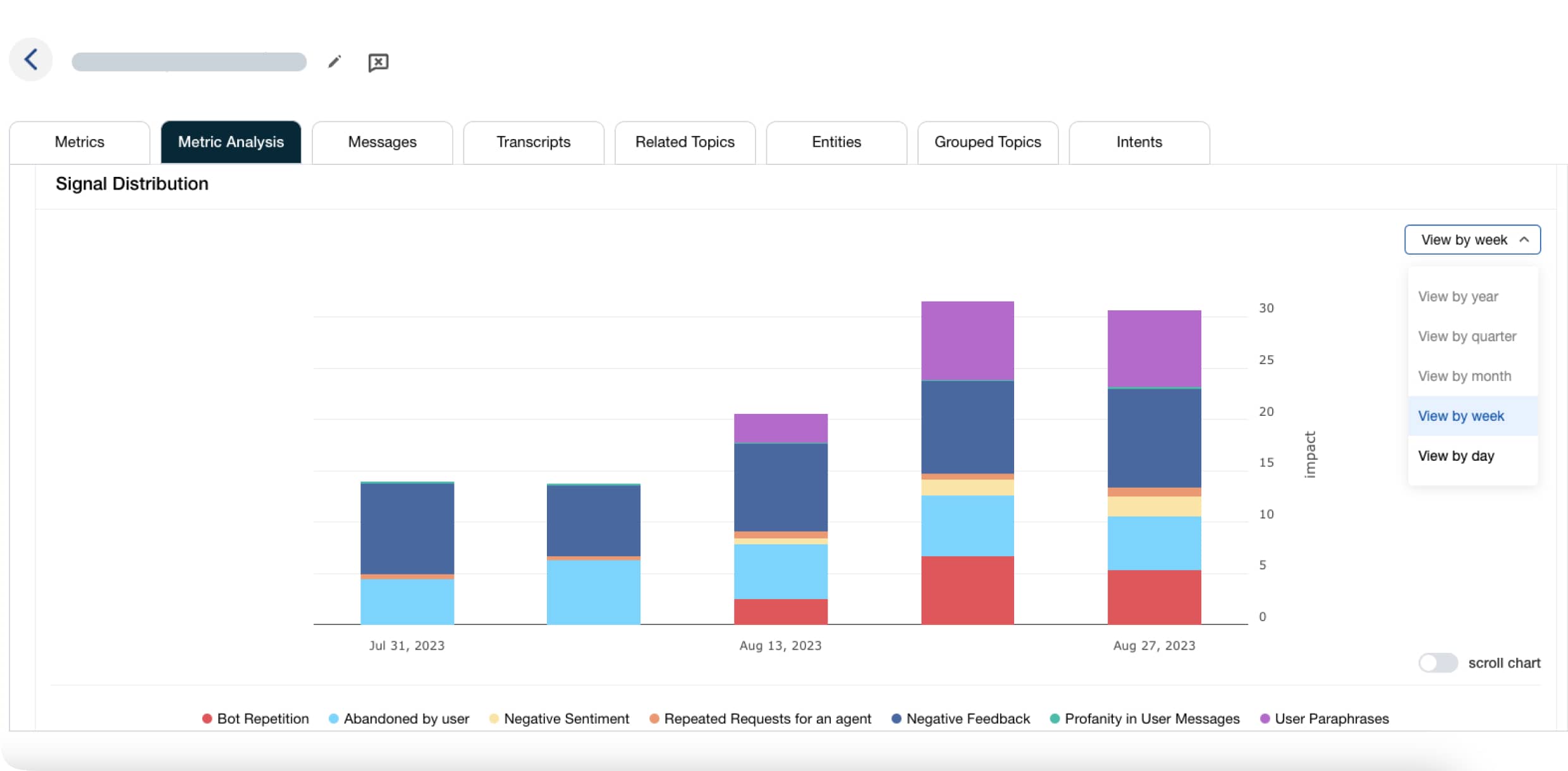

4 cornerstone chatbot metrics

- Monitor technical metrics, translate the outcomes into business KPIs, and better communicate the health and performance of your bot program.

- Each score is determined automatically using signals found within every conversation. Signal analysis tools help you understand the reasons behind performance drops, making it easy to fix them.

Did the bot solve the customer’s problem? More than containment, the bot automation score tells you whether the bot was effective in getting the customer the information they came for.

How did the customer feel about their experience – did they like it or not? Go deeper than surveys, and use sentiment analysis, natural language understanding and other signals to automatically determine the customer experience.

The promise of a chatbot is cost savings, so we give you clarity on how much you’re paying across all your conversations.

Calabrio Bot Analytics helps you know which topics could be automated, contributing to overall cost savings.

Expert-vetted chatbot analytics

Fast-moving chatbot teams rely on Calabrio to improve bot performance.

Features that give you more

Calabrio Bot Analytics helps you uncover what’s really happening in your virtual agent conversations.

With access to every transcript, and over 200 metrics unique to the performance of virtual agents, you get all the information you need to improve the quality and performance of your conversational AI bot.

Works with intent-based bots.

Conversational data is ingested via a secure API, and refreshed every 24 hours.

AI-based topic model organizes every conversation into thematic groups, for easy discovery and analysis.

Conversation data is redacted at ingest, and the redaction cannot be reversed.

Currently available for English and French; please contact us for other languages.

Direct connections to your data warehouse are available.
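As a rough illustration of what irreversible redaction at ingest can look like, the sketch below replaces matched personal data with fixed placeholders and keeps no mapping back to the original text. The patterns and the `redact` function are assumptions for illustration only and do not describe Calabrio's actual redaction pipeline.

```python
import re

# Hypothetical patterns; a production system would cover many more data types.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(message: str) -> str:
    """Replace matched spans with fixed placeholders.

    No lookup table is stored, so the original values cannot be restored
    from the redacted text.
    """
    message = EMAIL.sub("[EMAIL]", message)
    message = PHONE.sub("[PHONE]", message)
    return message


redacted = redact("Reach me at jane.doe@example.com or +1 (555) 010-9999.")
```

Because every match becomes the same placeholder, the redacted transcript still reads naturally for analysis while the personal details are gone for good.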

Impact by the numbers

Calabrio Bot Analytics helps chatbot teams accelerate bot performance improvement. Companies that choose Calabrio see results in as little as 6 months.

Fix frustrating bot issues

Does this sound familiar? Calabrio Bot Analytics can help.

Is the chatbot saving money in our contact center operations?

What do I tackle first?

Getting access to the data is too complicated and takes too long!

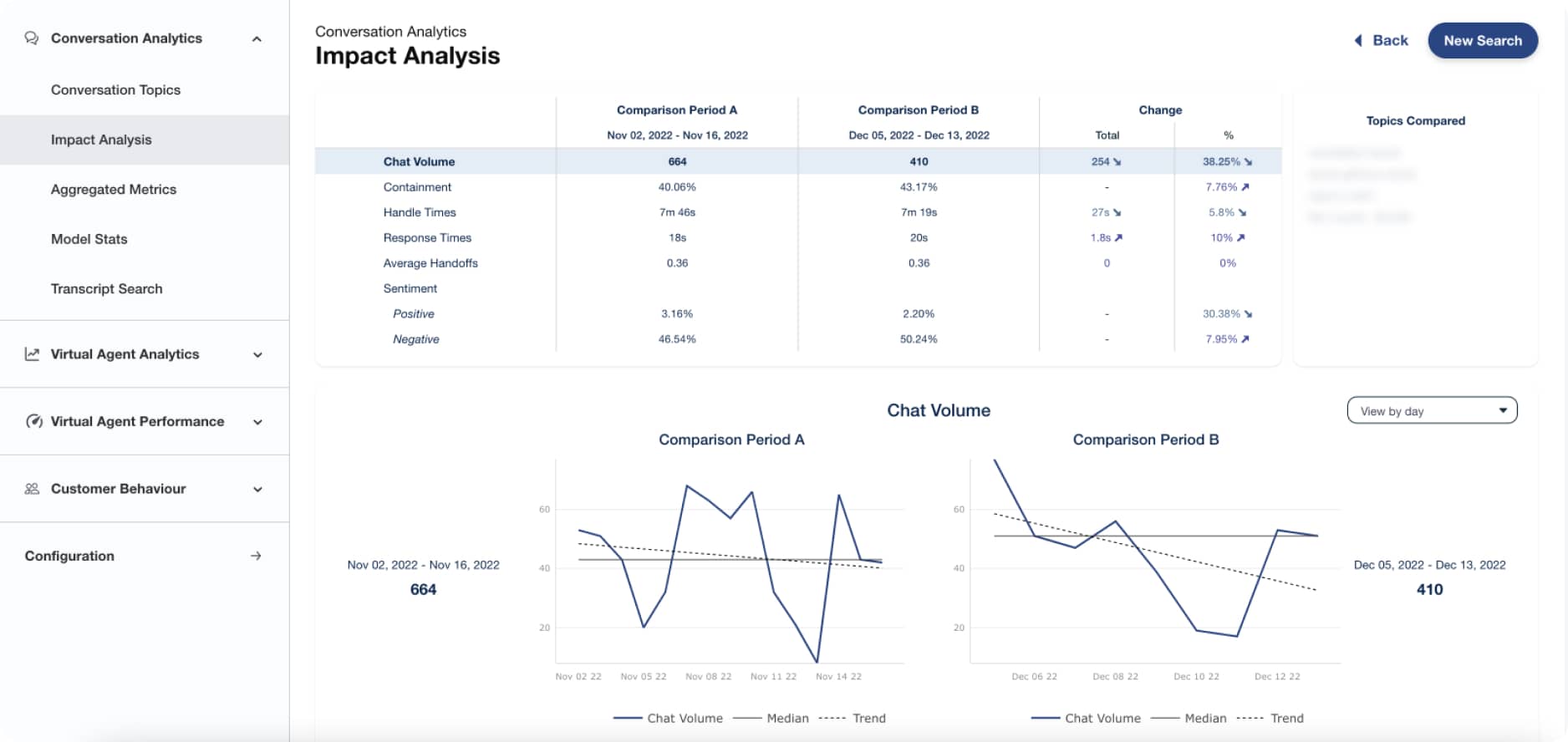

Are the changes I’m making having any impact?

Where is my bot struggling?

My bot is underperforming, I need to swap out the platform!

See it in action

Ready to see Calabrio Bot Analytics for yourself?