The chatbot performance metrics every team should be measuring

The beauty (and, sometimes, the burden) of virtual agents is that they’re never finished. You and your conversational chatbots can always learn something new, whether you launched them yesterday or 5 years ago. However, uncovering the right insights and implementing changes that have real impact depends on looking at the right aspects of performance.

To help you make the most of your bot performance and your customer experience, we’ve compiled a list of essential chatbot performance metrics you need to know—and be able to monitor—to evaluate and improve chatbot performance.

Essential Chatbot Performance Metrics: 4 Core KPIs for Bot Evaluation

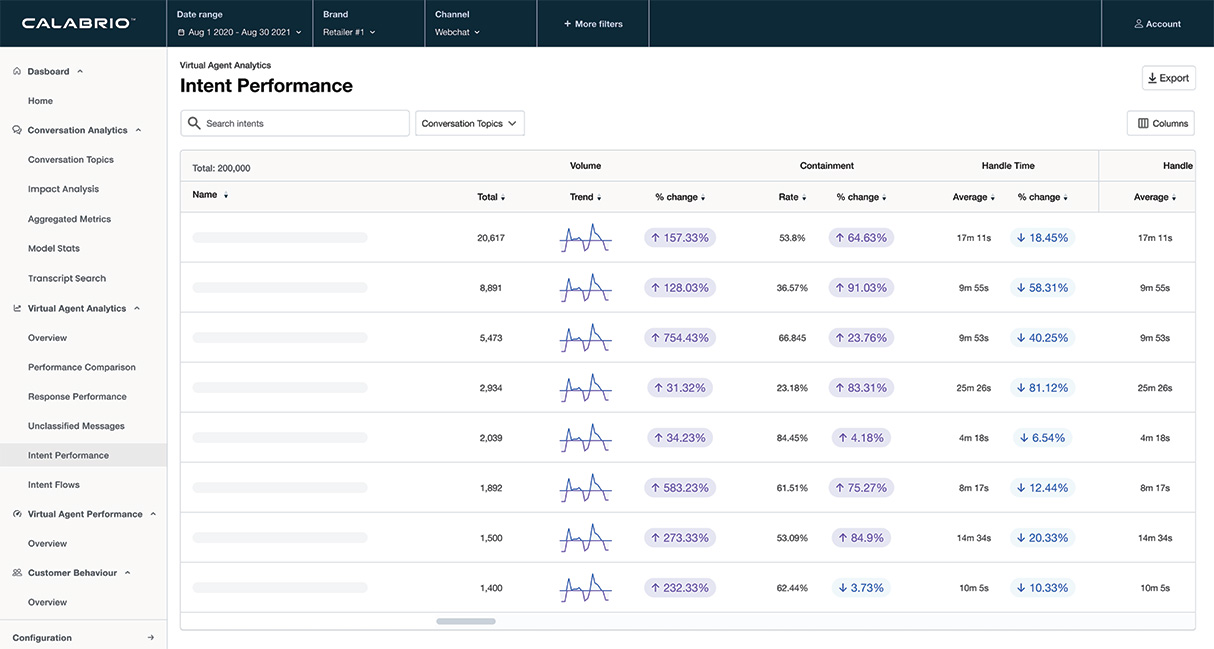

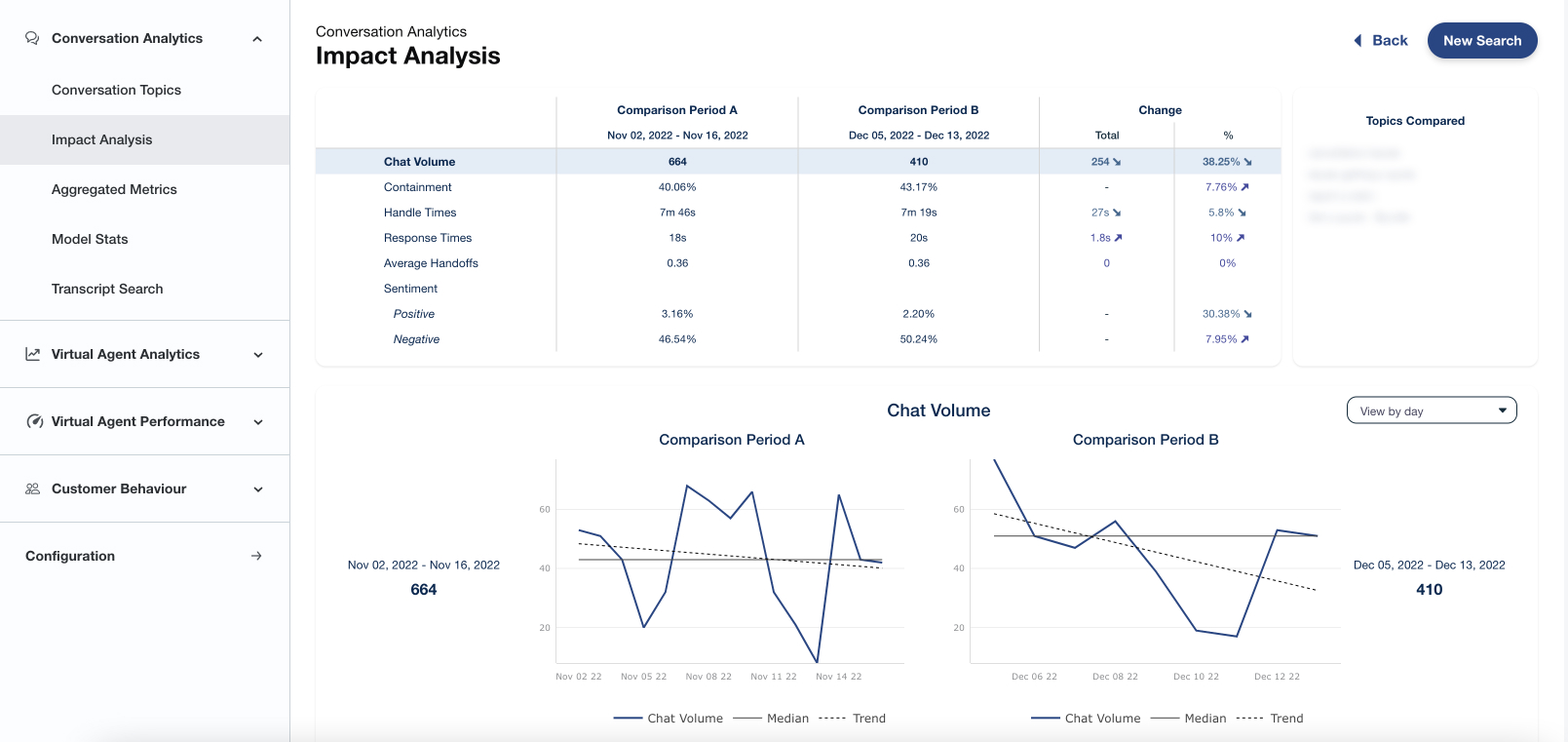

When trying to measure the effectiveness of your conversational chatbots, there are metrics and then there are metrics. These four cornerstone chatbot metrics, which can all be monitored with ease using Calabrio Bot Analytics, are indispensable in your pursuit of better bot performance.

1. Bot Experience Score

Measuring a customer’s experience when they’re interacting with a chatbot can be a challenge. For one, explicit feedback is rare, and it’s often biased toward the negative. Meanwhile, surveys typically provide a very narrow view of actual engagement, for a couple of reasons. Participation tends to be low, so you’re often working with a small sample size. Plus, those who do participate aren’t always authentic in their responses (if they’re incentivized, for example), which introduces unintended bias.

To understand customer experience and measure satisfaction without surveys, Calabrio has settled on a standard Bot Experience Score (BES) that can be used on any bot. This crucial chatbot KPI takes into account all customer conversations to produce an unbiased score and a more accurate view of overall customer satisfaction. This score measures the experience itself rather than the effectiveness of the bot.

The BES metric starts with a score of 100 and drops every time there is a negative engagement signal within the bot. The negative signals used in the BES include:

- Bot repetition occurred when the bot repeats itself for any reason during a conversation.

- Customer paraphrase occurred when the customer uses a similar query twice or more in a conversation.

- Abandonment occurred when the customer left the conversation mid-journey and did not reach a bot endpoint.

- Negative sentiment is detected using an AI-based sentiment model.

- Negative explicit feedback is received in the conversation.

- Profanity is present in the conversation.

- The customer used the word “agent” (or similar) more than once in a conversation. Note that using “agent” once and being directly escalated is not generally a bad experience.

The BES is based on an analysis of all conversations over a given period of time and reduces the score for negative experience signals that are common in all virtual agents. A conversation with one negative signal receives a score of 75, a conversation with two negative signals receives a score of 50, and a conversation with three or more receives a score of zero. All conversations are given a score, and the average is used.

Using this formula across all conversations in a given period of time results in a very clear customer experience score. Further, breaking down the Bot Experience Score by customer contact reason makes it actionable.
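To make the scoring rule concrete, here is a minimal Python sketch of the arithmetic described above. It is an illustration, not Calabrio's implementation: the signal names are hypothetical, and it assumes each conversation has already been reduced to the set of distinct negative signals detected in it.

```python
from statistics import mean

# Score by number of distinct negative signals; three or more scores 0.
SCORE_BY_SIGNAL_COUNT = {0: 100, 1: 75, 2: 50}


def conversation_score(signals):
    """Score one conversation from its negative signals (hypothetical names)."""
    return SCORE_BY_SIGNAL_COUNT.get(len(set(signals)), 0)


def bot_experience_score(conversations):
    """Average the per-conversation scores over a period of time."""
    return mean(conversation_score(signals) for signals in conversations)


# Four example conversations with 0, 1, 2, and 3 negative signals.
conversations = [
    set(),
    {"bot_repetition"},
    {"abandonment", "negative_sentiment"},
    {"profanity", "customer_paraphrase", "negative_feedback"},
]
print(bot_experience_score(conversations))  # (100 + 75 + 50 + 0) / 4 = 56.25
```

Grouping the conversations by contact reason before calling `bot_experience_score` would give the per-reason breakdown mentioned above.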

2. Bot Automation Score

The next most important metric for any bot program is how often the bot can satisfy the customer’s needs without the need for escalation to a live agent. We call this the Bot Automation Score (BAS).

The BAS is a binary metric that looks at whether the conversation was either fully automated or wasn’t. In contrast to the Bot Experience Score, BAS is not quite a measure of the experience itself, but rather how effective the bot is at completing tasks.

In our experience, having analyzed the performance of many bots, the most accurate measure of automation is derived using a formula that looks at negative signals. As a result, the Bot Automation Score starts with all conversations in a given period of time and is reduced based on the negative signals. The negative signals used in the BAS are:

- The customer did not reach a bot endpoint, which is one of the final steps in a bot journey.

- The customer escalated to a live agent for any reason.

- The customer submitted any type of explicit negative feedback.

- The bot recorded a false positive.

- The customer requested an agent using any “agent”-like word, but wasn’t escalated to an agent.

- “Bad Containment” occurred when the conversation was not escalated, but the topic was one that we know the bot is not effective at automating.

If a conversation includes any negative signal from the list above, it is considered not to be automated.

Using this formula across all conversations in a given period of time results in a very clear measure of automation. This can also be evaluated by contact reason to deliver more granular, actionable insight into chatbot performance. This negative-signal approach not only gives you a very conservative view of bot effectiveness, but also quickly makes it obvious which actions will increase overall automation rates.
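The binary rule can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the signal names are hypothetical, each conversation is represented by the set of negative automation signals found in it, and reporting the result as a percentage is our choice for the example.

```python
def is_automated(signals):
    """A conversation counts as automated only if it has no negative signal."""
    return len(signals) == 0


def bot_automation_score(conversations):
    """Percentage of conversations in the period that were fully automated."""
    conversations = list(conversations)
    if not conversations:
        raise ValueError("at least one conversation is required")
    automated = sum(1 for signals in conversations if is_automated(signals))
    return 100 * automated / len(conversations)


# Hypothetical signal names; two of the four conversations are clean.
conversations = [
    set(),
    {"escalated_to_agent"},
    set(),
    {"no_endpoint_reached", "false_positive"},
]
print(bot_automation_score(conversations))  # 50.0
```

Because a single signal is enough to disqualify a conversation, listing the most frequent signal among the non-automated conversations is a natural next step for deciding what to fix first.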

3. Cost per Automated Conversation

In the contact center industry, cost per call is a typical KPI that sheds light on the overall performance and efficiency of the call center. However, as bots take on increasingly prominent roles in today’s organizations, it’s crucial that call centers understand the cost per automated conversation—and can get to the heart of their automation’s ROI.

Cost per Automated Conversation may seem as simple as dividing bot platform costs and license fees by the total number of automated conversations. However, this approach overlooks important performance-based factors, such as whether a bot engagement actually fulfilled the customer’s requirements or whether it prompted them to switch to a more costly channel (a live chat or agent), leading to an increase in overall cost.

Ultimately, a chatbot analytics platform should account for three main factors when calculating cost per automated conversation:

- Platform fees, including licensing and implementation

- Conversation volume

- Automation. Beyond simple containment, automation takes into account whether a bot was able to genuinely satisfy a customer query without repetition, excessive transitions, or escalations.
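One way to combine the three factors is sketched below in Python. The formula is an assumption made for illustration, not a published Calabrio calculation: it divides platform fees by the number of conversations that were genuinely automated (volume multiplied by an automation rate), and it leaves out the added cost of conversations that move to a live agent.

```python
def cost_per_automated_conversation(platform_fees, conversation_volume, automation_rate):
    """Platform fees spread over genuinely automated conversations only.

    automation_rate is a fraction between 0 and 1 (assumed input).
    """
    automated = conversation_volume * automation_rate
    if automated <= 0:
        raise ValueError("no automated conversations in the period")
    return platform_fees / automated


# Hypothetical figures for one period.
fees = 10_000
volume = 20_000
rate = 0.5

print(fees / volume)  # cost per conversation of any kind: 0.5
print(cost_per_automated_conversation(fees, volume, rate))  # 1.0
```

In this example, counting only the conversations the bot actually resolved doubles the apparent cost per conversation, which is the gap the simpler calculation hides.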

4. Agent Experience Score

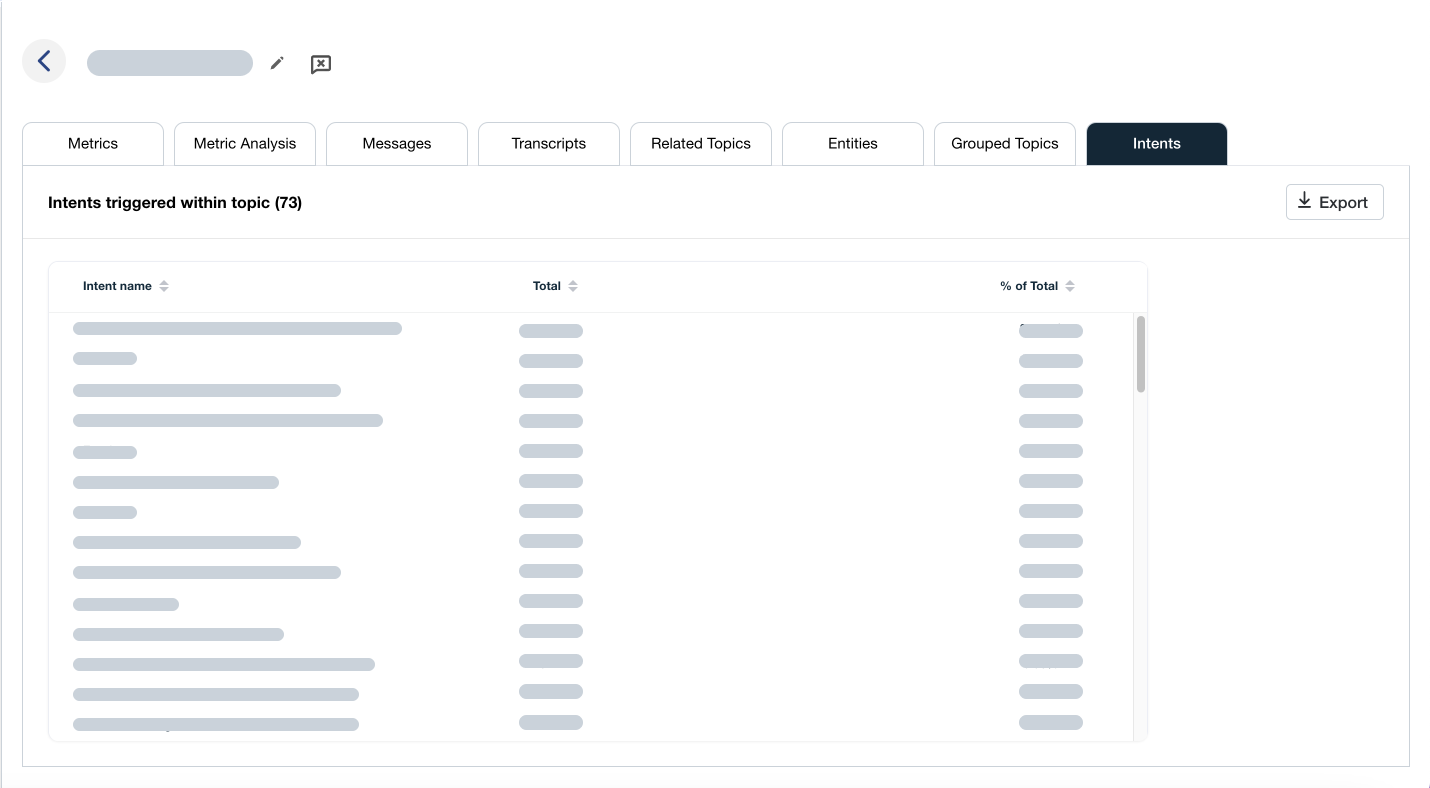

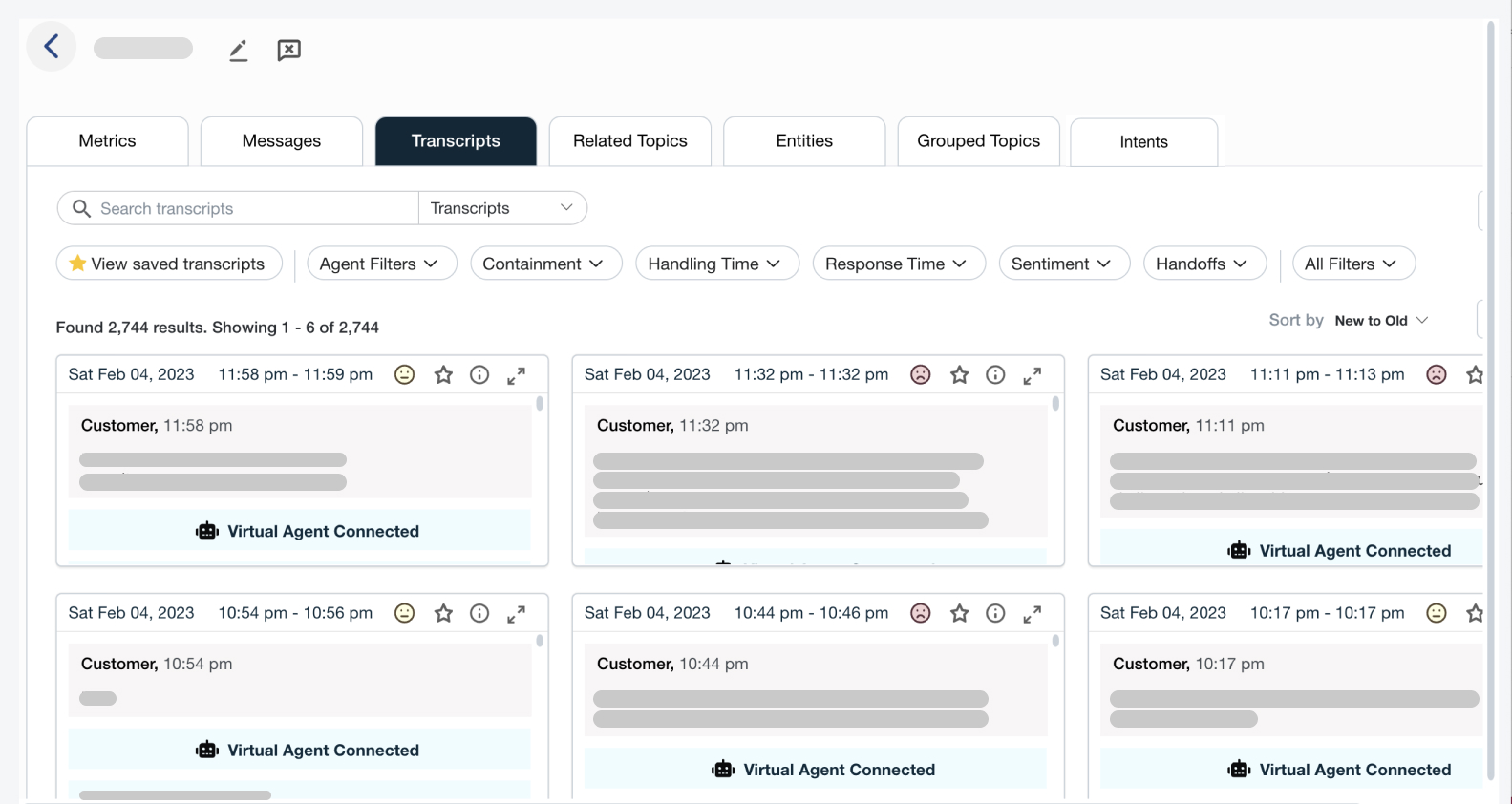

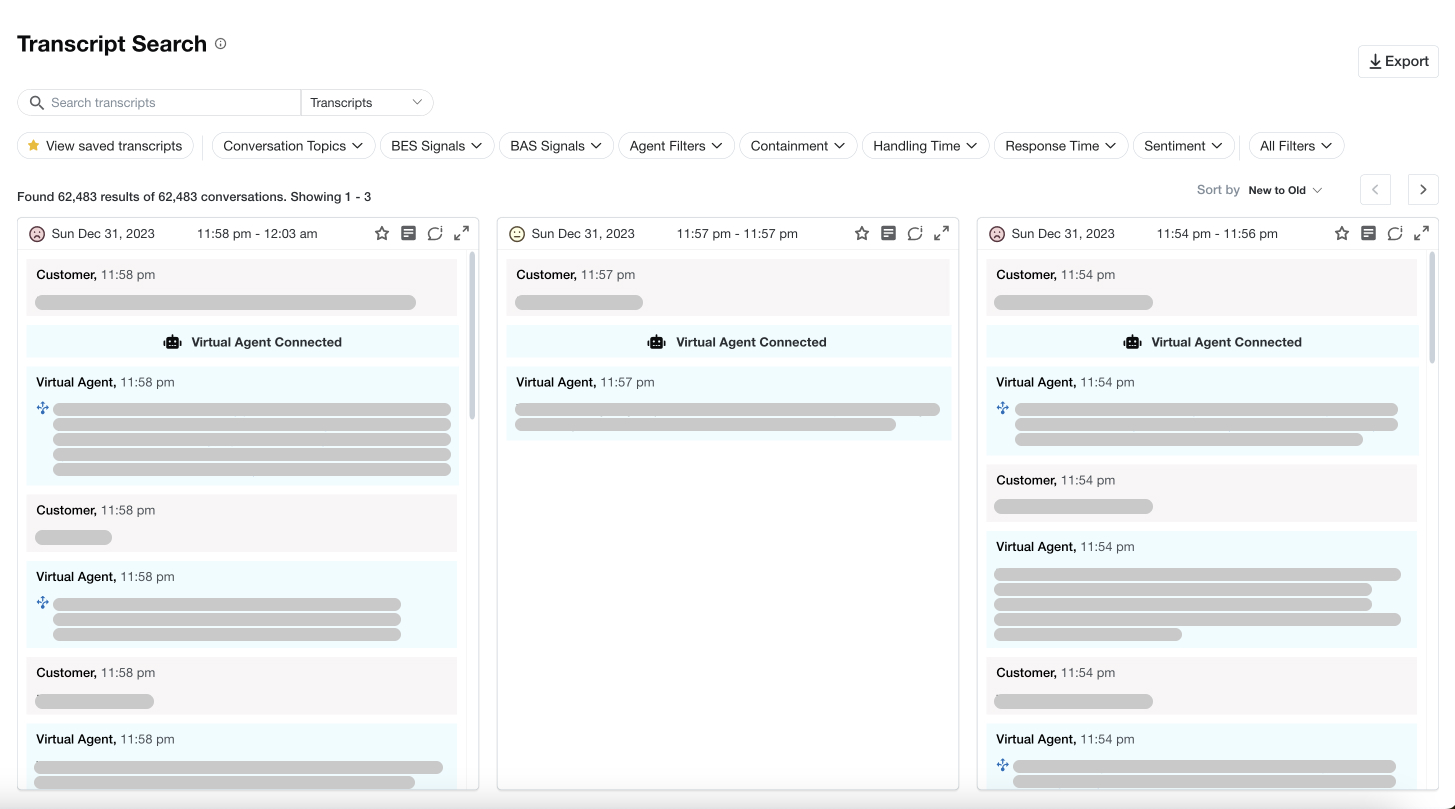

How do you understand what happens when a virtual conversation progresses to a live agent? In all likelihood, you’ll need the help of artificial intelligence to get a closer look. Calabrio Bot Analytics uniquely calculates an Agent Experience Score metric that looks at signals within conversation data such as agent abandonment, wait time, handle time, and sentiment to offer global oversight and local insight into overall performance as well as individual topics that would benefit from automation.

Beyond the Core: Additional Chatbot Metrics to Understand Effectiveness

The core chatbot KPIs outlined above can be crucial to understanding and improving bot performance. However, they’re far from the only ones available to teams with the right analytics at their disposal. The following chatbot success metrics can help teams zero in on more minute issues to drive further improvement.

5. False Positive Rate

The false positive rate measures how often the model classifies an utterance incorrectly while assigning it a high confidence level. This rate is difficult to measure and relies on an independent parallel NLP model. However, a lower false positive rate typically means that the natural language understanding (NLU) set up for a chatbot is of good quality.

6. Bot Repetition Rate

Bot repetition is used in the Bot Experience Score (BES) but is also a good independent measure of performance for all conversational bots. A virtual agent theoretically should never repeat itself, but this still happens regularly. Being able to identify and address these occurrences will lead to quick improvements.
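As a rough illustration, the Python sketch below computes a repetition rate as the share of conversations in which the bot sent the same message more than once. Treating two messages as the same when they match after lowercasing and collapsing whitespace is an assumption for this example; a production system may define repetition differently.

```python
from collections import Counter


def has_bot_repetition(bot_messages):
    """True if any bot message appears more than once in the conversation."""
    normalized = [" ".join(message.lower().split()) for message in bot_messages]
    return any(count > 1 for count in Counter(normalized).values())


def bot_repetition_rate(conversations):
    """Fraction of conversations that contain at least one repeated bot message."""
    conversations = list(conversations)
    if not conversations:
        raise ValueError("at least one conversation is required")
    repeated = sum(1 for messages in conversations if has_bot_repetition(messages))
    return repeated / len(conversations)


# Each inner list holds the bot's messages from one conversation.
conversations = [
    ["Hi there!", "How can I help?"],
    ["Sorry, I didn't get that.", "sorry, I didn't  get that."],
]
print(bot_repetition_rate(conversations))  # 0.5
```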

7. Positive Feedback Rate

In almost all situations, customers give negative feedback several times more often than positive feedback. The positive feedback rate is the amount of positive feedback divided by the total amount of feedback (positive, negative, and neutral), which yields a more useful rate.
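The calculation is a simple ratio, sketched here in Python with hypothetical feedback counts:

```python
def positive_feedback_rate(positive, negative, neutral=0):
    """Positive feedback as a fraction of all feedback received."""
    total = positive + negative + neutral
    if total == 0:
        raise ValueError("no feedback was received in the period")
    return positive / total


# Hypothetical counts: 25 positive, 65 negative, 10 neutral.
print(positive_feedback_rate(25, 65, 10))  # 0.25
```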

8. NLU (Natural-Language Understanding) Rate

The NLU rate is a common metric in the virtual agent industry. It simply measures how often a classifier can match an utterance to a known intent at a given confidence level.
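A minimal Python sketch of this rate follows. It assumes each utterance has been reduced to a pair of the classifier's top intent (or `None` when nothing matched) and its confidence; the intent names and the 0.7 threshold are hypothetical values chosen for the example.

```python
def nlu_rate(predictions, threshold=0.7):
    """Fraction of utterances matched to a known intent at or above the threshold."""
    predictions = list(predictions)
    if not predictions:
        raise ValueError("at least one utterance is required")
    matched = sum(
        1
        for intent, confidence in predictions
        if intent is not None and confidence >= threshold
    )
    return matched / len(predictions)


# (top intent, confidence) per utterance; None means no known intent matched.
predictions = [
    ("billing", 0.92),
    ("billing", 0.55),
    (None, 0.0),
    ("returns", 0.7),
]
print(nlu_rate(predictions))  # 0.5
```

Raising or lowering `threshold` changes the rate, so it helps to report the confidence level alongside the number.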

Getting to the heart of bot performance with chatbot analytics

Now that you know the most important chatbot metrics to monitor, how do you actually do so?

Many bot platforms are aligned with bot design and development but don’t offer a level of analytics that can deliver deep, impactful measurement of bot performance.

That’s where bot analytics software comes in. While a mature chatbot platform will easily provide all the events and signals required to produce the scores, an advanced chatbot analytics solution like Calabrio Bot Analytics will be able to deliver the clearest picture of bot quality.

Learn more about how our Bot Analytics platform can help you gain a deep understanding of chatbot and voicebot performance. And book a demo today to see Bot Analytics in action for yourself.